🤖 custom model configs

By default, Loom offers a selection of models through Latitude's API, but you can configure Loom to send generation requests to any model endpoint URL.

using a custom model endpoint

model config settings

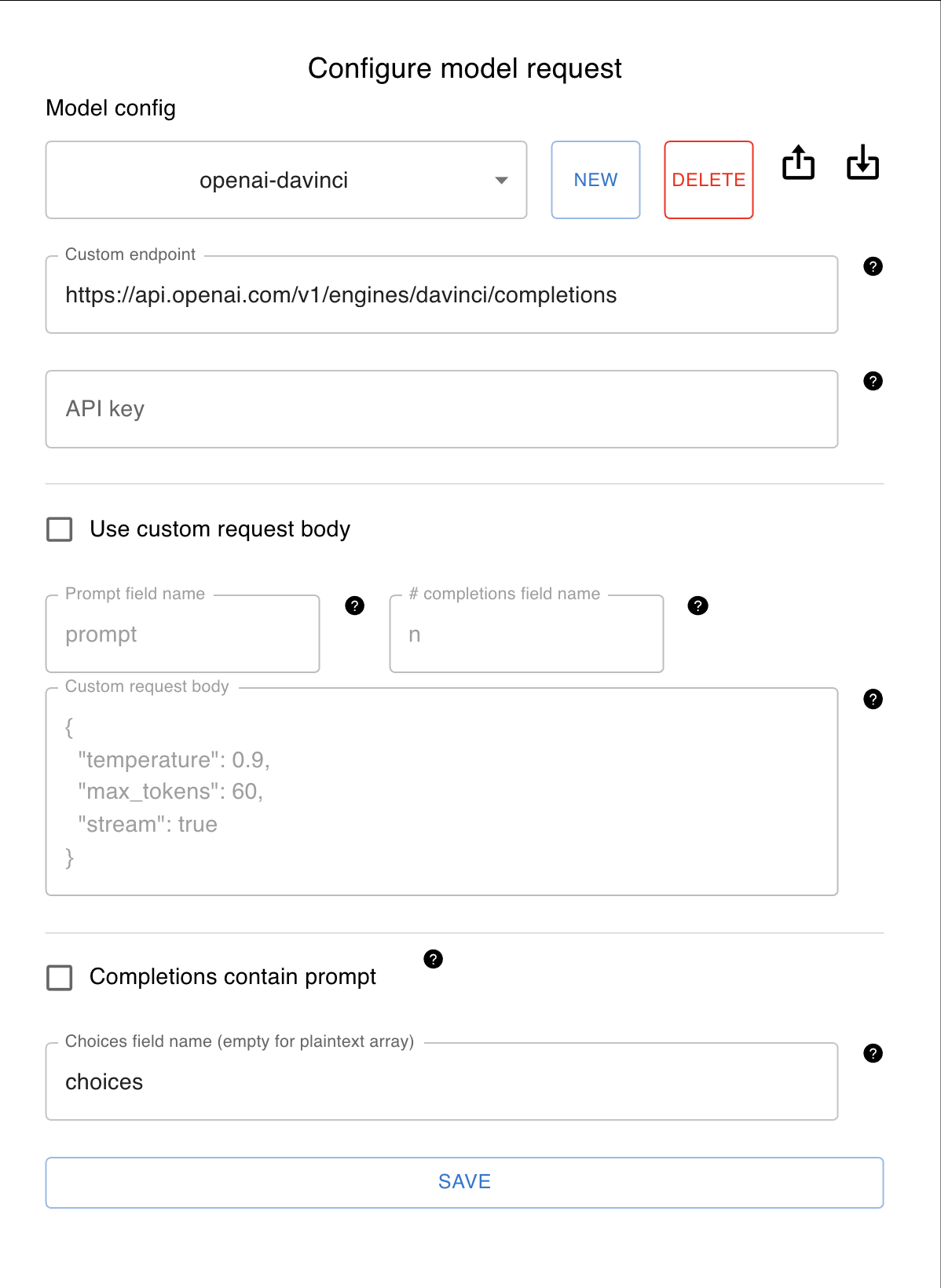

You can create model configs in Loom, which will be saved to your program state. Each model config has a unique name, like openai-davinci.

To open the model config modal, click the ⚙️ button next to the name of the model config in preferences, or press Ctrl+M (⌘M on macOS).

custom endpoint

The URL of the model endpoint. Loom sends its generation requests to this URL as POST requests.

API key

The API key for the model endpoint, if required.

API keys in model configs are saved in your program state and in any .loomstate exports, so treat those exports as sensitive.
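As a rough illustration of how an endpoint URL and an optional API key come together in a POST request, here is a minimal Python sketch. It is not Loom's implementation. The `make_request` helper, the example URL, the placeholder key, and the choice of a Bearer `Authorization` header are all assumptions; your endpoint may expect the key in a different header or field.

```python
import json
import urllib.request


def make_request(endpoint_url, body, api_key=None):
    """Build (but do not send) a JSON POST request for a model endpoint."""
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Assumption: the endpoint expects a Bearer token. Check your
        # endpoint's documentation for the scheme it actually uses.
        headers["Authorization"] = f"Bearer {api_key}"
    data = json.dumps(body).encode("utf-8")
    return urllib.request.Request(
        endpoint_url, data=data, headers=headers, method="POST"
    )


# Hypothetical endpoint and placeholder key, for illustration only.
req = make_request(
    "https://example.com/v1/completions",
    {"prompt": "Once upon a time"},
    api_key="sk-placeholder",
)
```

The request is only constructed here; passing it to `urllib.request.urlopen` would actually send it.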

custom request body

Loom's default way of constructing a request body is compatible with OpenAI and AI21 models, but not necessarily other endpoints.

The custom request body is a string in JSON format, like this example:
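The sketch below shows one hypothetical custom request body and a quick way to check that the string is valid JSON before pasting it into the field. The parameter names (`max_tokens`, `temperature`, `top_p`) are assumptions borrowed from common completion APIs, and `parse_custom_body` is an illustrative helper, not part of Loom. Use whatever fields your endpoint documents.

```python
import json

# Hypothetical custom request body, written as the string you would paste
# into the field. The field names are assumptions, not Loom requirements.
CUSTOM_REQUEST_BODY = """
{
  "max_tokens": 64,
  "temperature": 0.9,
  "top_p": 1.0
}
"""


def parse_custom_body(text):
    """Parse the string and make sure it is a JSON object."""
    body = json.loads(text)  # raises json.JSONDecodeError on invalid JSON
    if not isinstance(body, dict):
        raise ValueError("custom request body must be a JSON object")
    return body


parsed = parse_custom_body(CUSTOM_REQUEST_BODY)
```

Note that the prompt itself is left out of this string; it changes with every call and is added separately.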

use custom request body

A checkbox that determines whether Loom uses the custom request body. If unchecked, Loom uses its default request body format.

prompt field name

Name of the field in the request body that contains the prompt. Common values are prompt, text, or string.

This allows Loom to automatically add the prompt field to the request body each time the model is called, which is necessary because the value of the prompt can be different each time.

# completions field name

Name of the field in the request body that specifies how many parallel completions to generate.
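The two field-name settings can be pictured as keys that get filled in on every call. The following Python sketch is an approximation of that idea, not Loom's code. `build_request_body` and the field names `text` and `num_results` are hypothetical, chosen to show an endpoint that does not use the more common `prompt` and `n`.

```python
import json


def build_request_body(custom_body, prompt_field, count_field, prompt, n):
    """Merge the per-call prompt and completion count into a custom body."""
    body = json.loads(custom_body)  # the static part from the config
    body[prompt_field] = prompt     # changes on every call
    body[count_field] = n           # number of parallel completions
    return body


custom_body = '{"max_tokens": 64, "temperature": 0.9}'

# Hypothetical endpoint that calls its prompt field "text" and its
# completion-count field "num_results".
body = build_request_body(
    custom_body,
    prompt_field="text",
    count_field="num_results",
    prompt="Once upon a time",
    n=4,
)
```

Because the field names are configurable, the same static body can be reused against endpoints that name these two fields differently.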

completions

Model endpoints may also return completions in different formats.
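To make the difference in response formats concrete, the Python sketch below reads completions out of two made-up response shapes. It rests on assumptions about what the settings in this section mean: that the choices field name identifies the response field holding the list of completions, and that "completions contain prompt" marks endpoints that echo the prompt at the start of each completion. `extract_completions` and its `text_field` parameter are hypothetical and are not Loom settings.

```python
def extract_completions(response, choices_field, text_field=None,
                        prompt=None, contains_prompt=False):
    """Pull completion strings out of a decoded JSON response.

    Assumptions: `choices_field` names the list of completions, and
    `text_field` (hypothetical) names the text inside each list item when
    the items are objects rather than plain strings.
    """
    texts = []
    for item in response[choices_field]:
        text = item[text_field] if text_field is not None else item
        # Strip an echoed prompt so only the newly generated text remains.
        if contains_prompt and prompt is not None and text.startswith(prompt):
            text = text[len(prompt):]
        texts.append(text)
    return texts


# Shape 1: a list of objects under "choices", without the prompt.
resp_a = {"choices": [{"text": " there was a loom."}]}
out_a = extract_completions(resp_a, "choices", text_field="text")

# Shape 2: a list of plain strings under "outputs", with the prompt echoed.
resp_b = {"outputs": ["Once upon a time there was a loom."]}
out_b = extract_completions(resp_b, "outputs", prompt="Once upon a time",
                            contains_prompt=True)
```

Both calls end up with the same continuation text, even though the raw responses are laid out differently.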

completions contain prompt

choices field name

importing and exporting model configs

Model configs can be imported and exported as JSON files.

If you've set the API key field, the exported model config file will contain your API key.
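If you plan to share an exported config, one option is to blank out the key first. The Python sketch below does that without assuming the exact layout of the exported file: it walks the JSON and empties any value whose key looks like "api key". The example config, its field names, and the helper names are all hypothetical. Inspect your own export to confirm where the key is stored.

```python
import json


def looks_like_api_key(name):
    """True for key names such as apiKey, api_key, or API-Key."""
    normalized = name.lower().replace("_", "").replace("-", "").replace(" ", "")
    return normalized == "apikey"


def redact_api_keys(value):
    """Return a copy of decoded JSON with API key values blanked out."""
    if isinstance(value, dict):
        return {
            key: "" if looks_like_api_key(key) else redact_api_keys(inner)
            for key, inner in value.items()
        }
    if isinstance(value, list):
        return [redact_api_keys(item) for item in value]
    return value


# Hypothetical exported config; the real file layout may differ.
exported = json.loads(
    '{"name": "openai-davinci",'
    ' "endpoint": "https://example.com/v1/completions",'
    ' "apiKey": "sk-placeholder"}'
)
shareable = redact_api_keys(exported)
```

Writing `shareable` back out with `json.dump` gives a file that can be passed around, with the key re-entered after import.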
